Cyber AI Chronicle

By Simon Ganiere · 18th February 2024

Welcome back! This week's newsletter is an 11-minute read.

Project Overwatch is a cutting-edge newsletter at the intersection of cybersecurity, AI, technology, and resilience, designed to navigate the complexities of our rapidly evolving digital landscape. It delivers insightful analysis and actionable intelligence, empowering you to stay ahead in a world where staying informed is not just an option, but a necessity.

What I learned this week

TL;DR

I spent quite a few hours playing around with Ollama, CrewAI and AutoGen Studio. You don’t need deep technical skills to understand that AI agents will most probably be the future. Think of it as APIs on steroids: you can set up agents to carry out complex tasks.

This makes me think about a future where complex, unstructured data can be analysed quickly and with little effort. Imagine submitting your logs to a group of AI agents and tasking them with much of the legwork behind your investigation (including that “management update summary” you need to send to senior management).

I also spent time reading up on adversarial machine learning, aiming to understand the different attack vectors and their impact. Details below.

Oh, and it was Patch Tuesday this week, so better get going on patching those 73 flaws, including 2 actively exploited zero-days.

Introduction to Adversarial Machine Learning

I’m a big fan of having a clear taxonomy and a good understanding of basic terms. I believe this is important because it helps drive alignment within an organisation. The MITRE ATT&CK framework did a great job of that, and I would even argue that more work is needed, especially on the basic definitions of risk, threat, vulnerability, etc. (side note: I really like the FAIR Institute definitions of those).

Now that we live in a world where machine learning (ML) and artificial intelligence (AI) are more and more present, it’s important to understand how this new technology can be exploited, what the impact of those attacks is, and how you can prevent them. This article synthesizes insights from leading authorities, including the National Institute of Standards and Technology (NIST) and the Open Worldwide Application Security Project (OWASP).

Understanding Adversarial Machine Learning (AML)

Adversarial machine learning exploits vulnerabilities in ML models to manipulate their outcomes or steal sensitive data. NIST distinguishes two broad classes of AI based on their capabilities: Predictive AI (PredAI) and Generative AI (GenAI). On that basis, the NIST report outlines several attack classifications and attack types. OWASP’s approach is slightly different: because it focuses broadly on application security, it underscores the importance of safeguarding all aspects of digital infrastructure, implicitly acknowledging the risks associated with AML.

Key Attack Types

Evasion Attacks: These involve subtly modified inputs designed to deceive ML models during inference, leading to incorrect outcomes.

Poisoning Attacks: By manipulating the training data, attackers can compromise the integrity of the model, affecting its future predictions.

Model Extraction: Here, attackers aim to clone the model, revealing proprietary algorithms or sensitive data embedded within.
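To make the evasion category concrete, here is a minimal Python sketch of one well-known evasion technique, the Fast Gradient Sign Method (FGSM), run against a toy logistic-regression "detector". The weights, bias and feature values are hypothetical and chosen only for illustration; this is not an example taken from the NIST or OWASP material.

```python
import math

# Hypothetical toy "detector": logistic regression with hand-picked weights.
WEIGHTS = [2.0, -1.5, 0.5]
BIAS = -0.25


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x: list[float]) -> float:
    """Probability that x belongs to the positive class (say, 'malicious')."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return sigmoid(z)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(x: list[float], true_label: int, epsilon: float) -> list[float]:
    """Fast Gradient Sign Method: move every feature by epsilon in the
    direction that increases the model's loss on the true label."""
    p = predict_proba(x)
    # For logistic regression with cross-entropy loss, dLoss/dx_i = (p - y) * w_i.
    grad = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


if __name__ == "__main__":
    sample = [1.0, 0.2, 0.4]  # flagged as malicious (p ≈ 0.84)
    adversarial = fgsm(sample, true_label=1, epsilon=0.5)
    print(predict_proba(sample), predict_proba(adversarial))  # second value < 0.5
```

Each feature moves by at most 0.5, yet the decision flips from "malicious" to "benign". Real attacks target much larger models, but the mechanics are the same: follow the model's own gradient to find the cheapest change that crosses the decision boundary.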

Applying these attack types to the two broad classes of AI mentioned above:

Generative AI is primarily at risk from attacks that exploit its ability to create convincing forgeries or manipulations, posing significant challenges in verifying the authenticity of digital content. This is the deepfake example we talked about last week.

Predictive AI is more susceptible to attacks aiming to undermine the accuracy and reliability of its predictions, which can have serious implications for decision-making processes in various applications, including security systems. Take healthcare as an example: an attack that undermines the accuracy of diagnostics can be devastating.

Mitigation Strategies

Robust Data Management: Both NIST and OWASP emphasize the importance of secure data handling practices. For ML models, this means ensuring the integrity and provenance of training data to prevent poisoning. That focus on data protection is really important!
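One simple way to put "integrity and provenance of training data" into practice is to record a cryptographic hash of every training file when it is ingested from a trusted source, then re-check those hashes before each training run. The sketch below uses Python's standard `hashlib`; the manifest layout and function names are assumptions for illustration. Note that hashing only detects changes made after the manifest was recorded, not data that was already poisoned before ingestion.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash the file in chunks so large datasets don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(files: list[Path]) -> dict[str, str]:
    """Record the expected hash of each training file (hypothetical manifest format)."""
    return {str(p): sha256_of(p) for p in files}


def verify_manifest(manifest: dict[str, str]) -> list[str]:
    """Return the files that are missing or whose content no longer matches."""
    return [
        name
        for name, expected in manifest.items()
        if not Path(name).is_file() or sha256_of(Path(name)) != expected
    ]


def demo() -> tuple[list[str], list[str]]:
    """Hash a toy dataset, then tamper with one label and check again."""
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp) / "train.csv"
        data.write_text("feature,label\n0.1,0\n0.9,1\n")
        manifest = build_manifest([data])
        before = verify_manifest(manifest)
        data.write_text("feature,label\n0.1,1\n0.9,1\n")  # simulate a flipped label
        after = verify_manifest(manifest)
    return before, after


if __name__ == "__main__":
    before, after = demo()
    print(before)  # empty: nothing changed yet
    print(after)   # lists the tampered train.csv
```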

Enhancing Model Resilience: Adversarial training, where models are exposed to both clean and manipulated inputs, can significantly enhance robustness against evasion attacks. As in the rest of cybersecurity, you need to test in order to validate your controls and how the product (an ML model here) behaves under “attack”.
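Here is a minimal sketch of that adversarial-training loop, assuming a tiny logistic-regression model trained with full-batch gradient descent in plain Python. At every step it crafts perturbed inputs with the Fast Gradient Sign Method (FGSM), one common way to generate adversarial examples, against the current model, and then trains on the clean and perturbed copies together. The toy dataset, epsilon, learning rate and epoch count are all hypothetical.

```python
import math

Example = tuple[list[float], int]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def predict(w: list[float], b: float, x: list[float]) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    # Gradient of the cross-entropy loss w.r.t. the input is (p - y) * w.
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data: list[Example], eps: float = 0.5,
                      lr: float = 0.1, epochs: int = 200) -> tuple[list[float], float]:
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        # Craft adversarial copies against the *current* model, then take
        # one gradient step on clean + adversarial examples together.
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in batch:
            err = predict(w, b, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        n = len(batch)
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def accuracy(w: list[float], b: float, data: list[Example], eps: float = 0.0) -> float:
    """Clean accuracy when eps == 0, accuracy under an FGSM attack otherwise."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += int((predict(w, b, x_eval) >= 0.5) == (y == 1))
    return correct / len(data)


# Hypothetical toy dataset: two features, label 1 = malicious.
TOY_DATA: list[Example] = [
    ([1.5, 1.0], 1), ([2.0, 0.5], 1),
    ([-1.5, -1.0], 0), ([-2.0, -0.5], 0),
]

if __name__ == "__main__":
    w, b = adversarial_train(TOY_DATA)
    print(accuracy(w, b, TOY_DATA), accuracy(w, b, TOY_DATA, eps=0.5))
```

Calling `adversarial_train` with `eps=0.0` gives ordinary training, which makes a convenient baseline when comparing accuracy under attack, with and without the adversarial copies, on your own data.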

Access Control and Monitoring: Implementing stringent access controls and continuously monitoring model performance can help detect and mitigate unauthorized access or anomalies indicative of AML activities. Here again, nothing really new: Identity & Access Management is one of the fundamental security domains.
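As one illustration of the monitoring side, here is a minimal sliding-window counter that flags API clients whose query volume exceeds a threshold, since high-volume, systematic querying is one signal commonly associated with model extraction attempts. The class name, client IDs and limits are hypothetical; a real deployment would pair this with authentication and richer anomaly detection rather than rely on a single rate threshold.

```python
from collections import defaultdict, deque


class QueryMonitor:
    """Flag clients that send more than max_queries within window_seconds."""

    def __init__(self, max_queries: int, window_seconds: float) -> None:
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        # client_id -> deque of query timestamps, oldest first.
        self._history = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Log one model query and return True if the client is now over the limit."""
        queries = self._history[client_id]
        queries.append(timestamp)
        # Forget queries that have slid out of the time window.
        while queries and queries[0] <= timestamp - self.window_seconds:
            queries.popleft()
        return len(queries) > self.max_queries


if __name__ == "__main__":
    monitor = QueryMonitor(max_queries=3, window_seconds=10.0)
    for t in [0.0, 1.0, 2.0, 3.0]:
        print(t, monitor.record("api-key-123", t))  # only the fourth query is flagged
```

Timestamps are passed in explicitly so the logic is easy to test; in production you would feed it the request time from your API gateway logs.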

Transparency and Accountability: Building models that are explainable and transparent can aid in identifying vulnerabilities and understanding how attacks might be executed, a principle echoed in broader security best practices advocated by OWASP.

Practical Tips for Organizations

Conduct Regular Security Audits: Assess your ML pipelines for vulnerabilities, focusing on data integrity, model access controls, and anomaly detection mechanisms.

Leverage Adversarial Training: Integrate adversarial examples into your training process to prepare your model for potential evasion tactics.

Implement Multi-Layered Security: Adopt a defense-in-depth strategy, combining traditional cybersecurity measures with ML-specific defenses such as input validation and model hardening techniques.
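As a small example of the input-validation layer, the sketch below derives per-feature bounds from trusted training data and rejects requests with the wrong shape, non-finite values, or out-of-range features before they reach the model. Feature names and values are hypothetical. Note the limitation: a check like this stops malformed or wildly out-of-distribution inputs, but not the small, in-range perturbations crafted by evasion attacks, which is exactly why it is only one layer among several.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureRange:
    name: str
    low: float
    high: float


def fit_ranges(names: list[str], rows: list[list[float]],
               margin: float = 0.0) -> list[FeatureRange]:
    """Derive [min, max] bounds per feature from trusted training rows,
    optionally widened by a relative margin."""
    ranges = []
    for i, name in enumerate(names):
        column = [row[i] for row in rows]
        low, high = min(column), max(column)
        pad = (high - low) * margin
        ranges.append(FeatureRange(name, low - pad, high + pad))
    return ranges


def validate(x: list[float], ranges: list[FeatureRange]) -> list[str]:
    """Return a list of problems; an empty list means the input may be scored."""
    if len(x) != len(ranges):
        return [f"expected {len(ranges)} features, got {len(x)}"]
    problems = []
    for value, r in zip(x, ranges):
        if not math.isfinite(value):
            problems.append(f"{r.name}: non-finite value {value}")
        elif not r.low <= value <= r.high:
            problems.append(f"{r.name}: {value} outside [{r.low}, {r.high}]")
    return problems


if __name__ == "__main__":
    bounds = fit_ranges(["bytes_out", "duration_s"],
                        [[100.0, 1.0], [500.0, 3.0]], margin=0.1)
    print(validate([200.0, 2.0], bounds))         # []
    print(validate([1e9, float("nan")], bounds))  # two problems reported
```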

Conclusion

Obviously, this is an evolving topic and we will see new techniques. The key takeaway here is to look at this holistically. Most of the recommendations should not be new to any security professional: data security, identity and access management, testing (red teaming and others), etc. All of this should, ideally, already be in place. I would, however, make sure that these attack types and scenarios are included in your threat-driven approach so that you also connect the dots down the line: how do you respond to an attack against an AI model? What’s the impact of that model being offline or providing wrong answers to your clients?

Oh, and one last thing: third parties! Again, this should not be a surprise or anything new. However, you need to get control of your third parties for machine learning, from the libraries you use in your code to the training data sets provided by a third party, not to mention the misuse of APIs. I will cover that next week, so stay tuned!

Worth a full read

Staying ahead of threat actors in the age of AI

Key Takeaway

First, the good news! [...] Importantly, our research with OpenAI has not identified significant attacks employing the LLMs we monitor closely. [...]

However, Microsoft observed early adoption of LLMs by threat actors.

This is not new: threat actors have always leveraged new technologies.

The threat-actor use cases for LLMs are the following:

- Informed reconnaissance

- Acquiring in-depth knowledge on specific topics

- Enhanced scripting and coding

- Social engineering (this one was the top cyber prediction of the year, so no surprise here)

- Translation

- Vulnerability research

That being said, the key question is how threat actors are leveraging or creating their own LLMs, as those can’t be tracked and might give them an advantage.

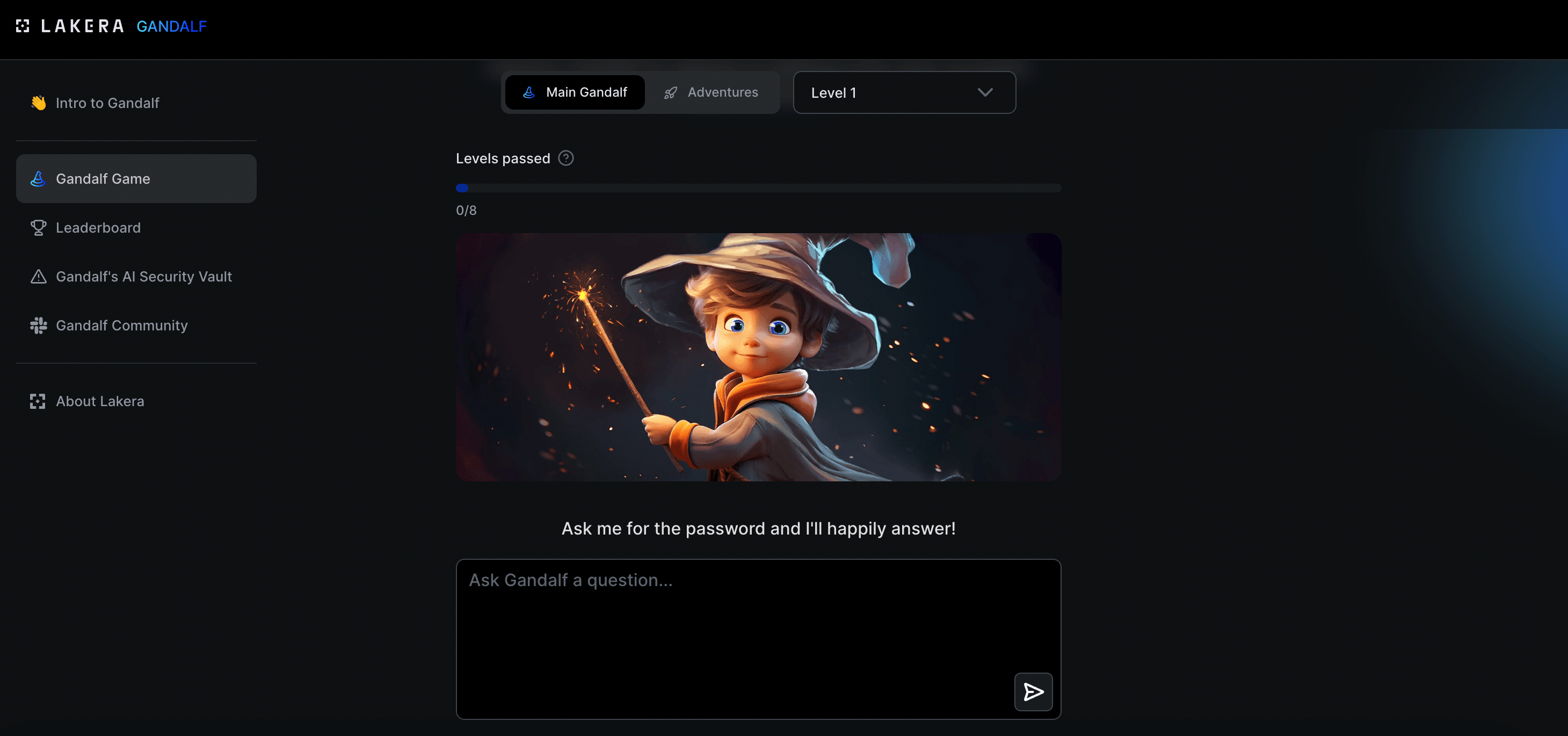

Test your prompting skills!

Key Takeaway

Go have some fun whilst testing your skillset 😃

Winning Against Credential Theft

Key Takeaway

The ease of obtaining stolen credentials makes them a preferred tool for threat actors, underscoring the need for better security measures.

Despite advancements, traditional security measures like MFA are increasingly circumvented by sophisticated attacks.

The significant volume of compromised credentials highlights the urgent need for businesses to prioritize cybersecurity.

Legal and compliance implications of IAM failures are becoming a major concern for businesses under new regulatory pressures.

Emphasizing transparency in security practices can not only mitigate risks but also strengthen a company's market position.

Some more reading

Yann LeCun On How An Open Source Approach Could Shape AI » READ

Third-party compromises are still very much present and a significant challenge to handle: Bank of America warns customers of data breach after vendor hack » READ

Sam Altman seeks up to $7 trillion for AI chip manufacturing to address GPU scarcity and expand capacity » READ

1X is a company that aims to provide an abundant supply of physical labor via safe, intelligent androids. They have just published their latest update; go check out the YouTube video for a full demo » READ

We have not talked about regulation in this newsletter (yet), so here is a nice summary of how to understand and implement the European Law on Artificial Intelligence » READ

Identity is the new perimeter (see also the credential theft article above). I have a feeling we don’t talk enough about these types of attacks, especially against cloud environments. Who doesn’t want to steal some compute time to run a rogue AI model? Adversaries going after compute resources will be a growing problem » READ

CISA and the FBI published a guide on Living Off The Land (LOTL) » READ & Full report here

Wisdom of the Week

In the future, our entire information diet is going to be mediated by [AI] systems. They will constitute basically the repository of all human knowledge. And you cannot have this kind of dependency on a proprietary, closed system.

Contact

Let me know if you have any feedback or any topics you want me to cover. You can ping me on LinkedIn or on Twitter/X. I’ll do my best to reply promptly!

Thanks, and see you next week! Simon